Physician-investigators at Beth Israel Deaconess Medical Center (BIDMC) compared a chatbot’s probabilistic reasoning to that of human clinicians. The findings, published in JAMA Network Open, suggest that artificial intelligence could serve as a useful clinical decision support tool for physicians.

“Humans struggle with probabilistic reasoning, the practice of making decisions based on calculating odds,” said the study’s corresponding author, Adam Rodman, MD, an internal medicine physician and investigator in the Department of Medicine at BIDMC.

“Probabilistic reasoning is one of several components of making a diagnosis, which is an incredibly complex process that uses a variety of different cognitive strategies. We chose to evaluate probabilistic reasoning in isolation because it is a well-known area where humans could use support.”

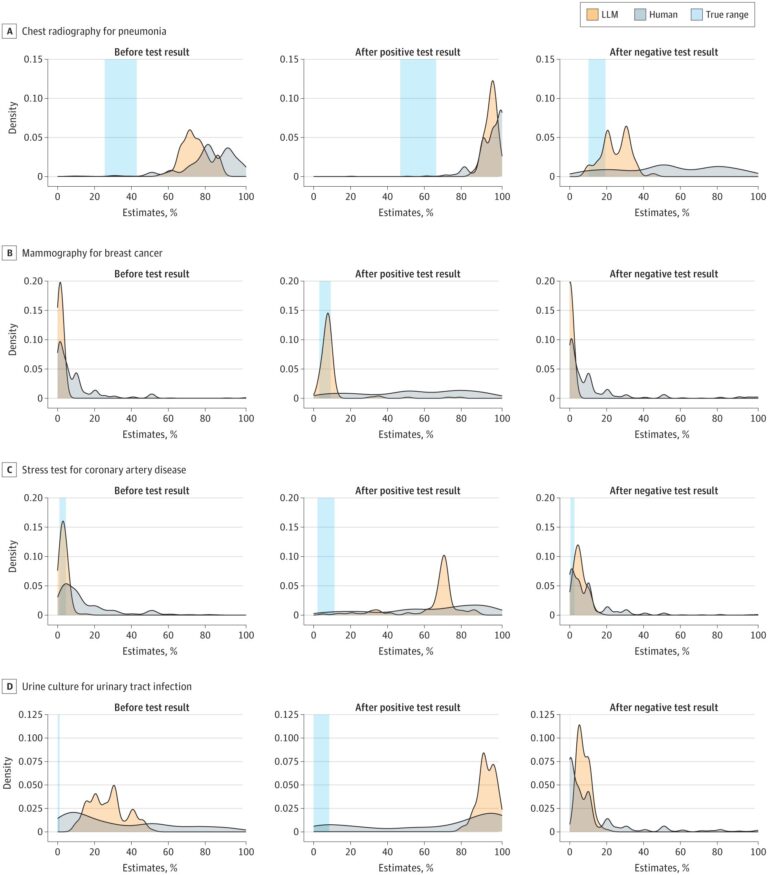

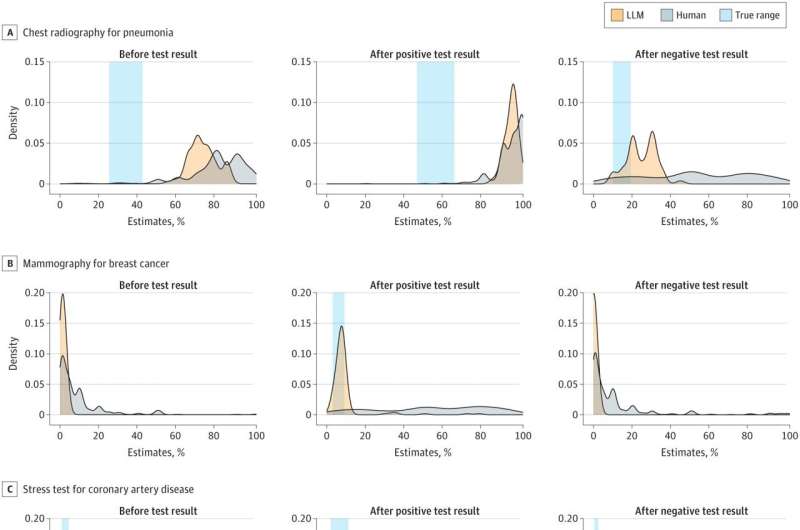

Basing their study on a previously published national survey of more than 550 practitioners performing probabilistic reasoning on five medical cases, Rodman and colleagues fed the publicly available large language model (LLM) ChatGPT-4 the same series of cases and ran an identical prompt 100 times to generate a range of responses.

The chatbot, just like the practitioners before it, was tasked with estimating the likelihood of a given diagnosis based on patients’ presentation. Then, given test results such as chest radiography for pneumonia, mammography for breast cancer, a stress test for coronary artery disease, and a urine culture for urinary tract infection, the chatbot updated its estimates.
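The update step described above, moving from a pre-test estimate to a post-test estimate, is conventionally formalized with Bayes' rule in odds form. The following is a minimal sketch of that textbook calculation, not the method used in the study or by the chatbot. The function name `post_test_probability` and all numeric values (pre-test probability, sensitivity, specificity) are hypothetical and chosen only for illustration.

```python
def post_test_probability(pretest, sensitivity, specificity, positive):
    """Update a pre-test probability after a test result.

    Uses Bayes' rule in odds form:
        post-test odds = pre-test odds * likelihood ratio
    """
    for name, value in (("pretest", pretest),
                        ("sensitivity", sensitivity),
                        ("specificity", specificity)):
        if not 0.0 < value < 1.0:
            raise ValueError(f"{name} must be strictly between 0 and 1")

    if positive:
        # Positive likelihood ratio: true-positive rate / false-positive rate
        likelihood_ratio = sensitivity / (1.0 - specificity)
    else:
        # Negative likelihood ratio: false-negative rate / true-negative rate
        likelihood_ratio = (1.0 - sensitivity) / specificity

    pretest_odds = pretest / (1.0 - pretest)
    post_odds = pretest_odds * likelihood_ratio
    return post_odds / (1.0 + post_odds)


# Hypothetical inputs for illustration only; these are NOT figures from the study.
pretest = 0.20
sensitivity, specificity = 0.90, 0.80

after_positive = post_test_probability(pretest, sensitivity, specificity, positive=True)
after_negative = post_test_probability(pretest, sensitivity, specificity, positive=False)

print(f"After a positive result: {after_positive:.2f}")
print(f"After a negative result: {after_negative:.2f}")
```

With these assumed inputs, a positive result raises the estimate from 20% to roughly 53%, while a negative result lowers it to roughly 3%.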

When test results were positive, it was something of a draw: the chatbot was more accurate in making diagnoses than the humans in two cases, similarly accurate in two cases, and less accurate in one case. But when tests came back negative, the chatbot shone, demonstrating more accuracy in making diagnoses than humans in all five cases.

“Humans sometimes feel the risk is higher than it is after a negative test result, which can lead to overtreatment, more tests, and too many medications,” said Rodman.

But Rodman said he is less interested in how chatbots and humans perform head-to-head than in how highly skilled physicians’ performance might change when these new supportive technologies are available to them in the clinic. He and his colleagues are looking into it.

“LLMs can’t access the outside world; they aren’t calculating probabilities the way that epidemiologists, or even poker players, do. What they’re doing has a lot more in common with how humans make spot probabilistic decisions,” he said.

“But that’s what is exciting. Even if imperfect, their ease of use and ability to be integrated into clinical workflows could theoretically make humans make better decisions,” he said. “Future research into collective human and artificial intelligence is sorely needed.”

More information:

Adam Rodman et al, Artificial Intelligence vs Clinician Performance in Estimating Probabilities of Diagnoses Before and After Testing, JAMA Network Open (2023). DOI: 10.1001/jamanetworkopen.2023.47075

Citation:

AI chatbot shows potential as diagnostic partner, researchers find (2023, December 11), retrieved 11 December 2023 from https://medicalxpress.com/information/2023-12-ai-chatbot-potential-diagnostic-partner.html
